BulkUnsubscribe: Unsubscribe from all marketing emails in 1-click

I wrote this in January 2024, and have been sitting on it for a while.

I've decided not to host this service. While I have gotten through Google's CASA to get the scopes I need, they require it to be renewed every year, and AWS has decided that this is not allowed to use SES (for unexplained reasons...). As a result, a managed version that doesn't run its own SMTP server will be hard to monetize, or to provide without running at a significant loss.

The code is available at https://github.com/danthegoodman1/BulkUnsubscribe. It's easy to self-host and run with dev OAuth credentials for use on your own account.

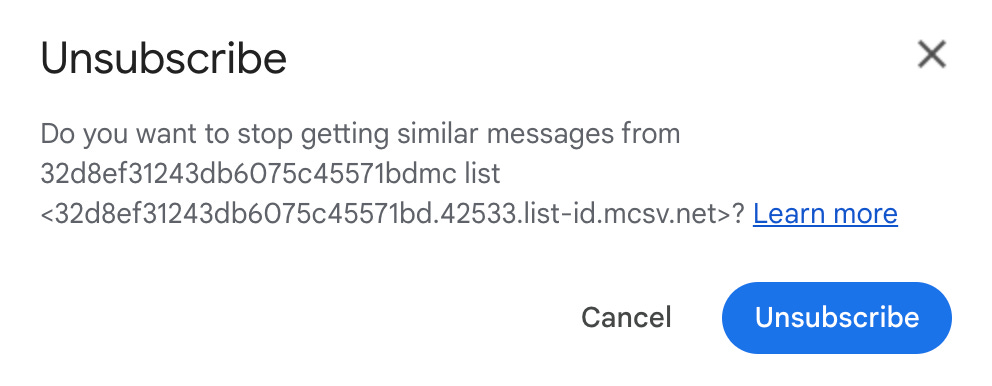

As of February 2024, Gmail and Yahoo require that bulk email sending includes the `List-Unsubscribe` header. This allows them to present an `Unsubscribe` button for easy unsubscribing from email lists.

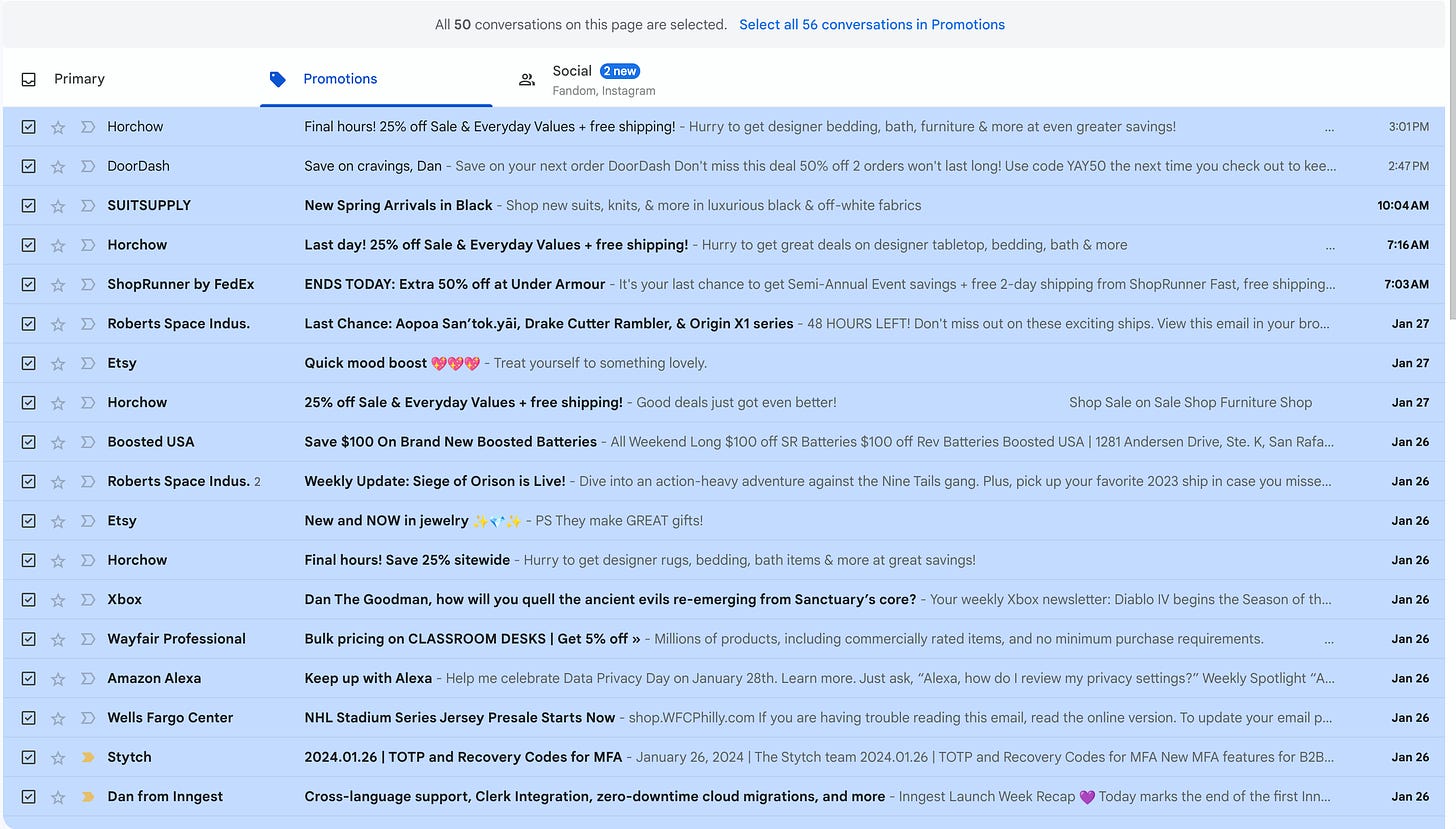

Odds are, after a few days your gmail looks like this:

How they got my email, most of them I don't know (or care).

What I do know is how to code, and now that Google (and others) have required a standard for unsubscribing, I can build something that does it all in one shot!

If you want to skip to the code, here is the repo.

Three ways to unsubscribe

It's not as simple as just going to the link in the header. There seem to be three ways that it can be handled.

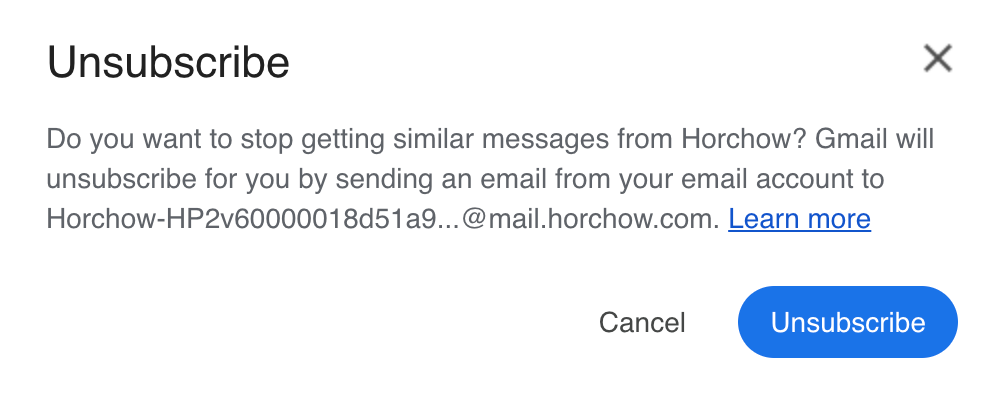

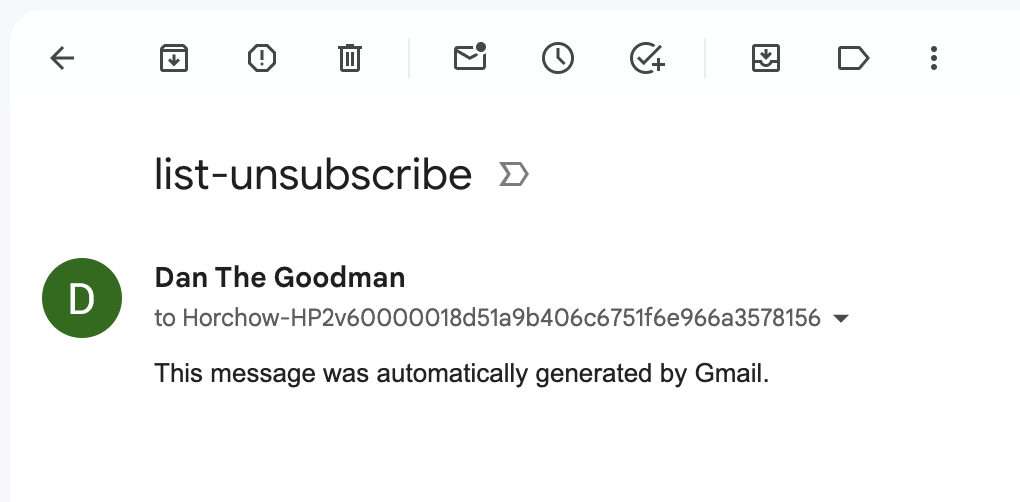

The `mailto` method:

What happens here is that Google sends an email to this special address for you. That triggers a removal of your subscription.

Here's what that email actually looks like:

The raw header looks like this:

```
List-Unsubscribe: <mailto:Horchow-HP2v60000018d51a9b406c6751f6e966a3578156@mail.horchow.com?subject=list-unsubscribe>
```

It sent an email to the address in the header, with the provided subject. It seems the content of the email does not matter.
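To make the address and subject split concrete, here is a minimal sketch of pulling both out of a `mailto` URI. The `parseMailto` helper and the `MailtoTarget` type are hypothetical names for illustration, not code from the repo, and it assumes a runtime with the standard WHATWG `URL` class (Node.js or a browser).

```typescript
// Hypothetical helper (not from the repo): split a mailto URI taken from a
// List-Unsubscribe header into the target address and the optional subject.
interface MailtoTarget {
  address: string
  subject?: string
}

function parseMailto(uri: string): MailtoTarget | undefined {
  if (!uri.toLowerCase().startsWith("mailto:")) return undefined
  try {
    const url = new URL(uri)
    // For mailto URIs the address is the (possibly percent-encoded) path
    const address = decodeURIComponent(url.pathname)
    if (!address) return undefined
    const subject = url.searchParams.get("subject") ?? undefined
    return { address, subject }
  } catch {
    // Malformed URI or bad percent-encoding: treat as not parseable
    return undefined
  }
}

const target = parseMailto(
  "mailto:Horchow-HP2v60000018d51a9b406c6751f6e966a3578156@mail.horchow.com?subject=list-unsubscribe"
)
console.log(target?.address, target?.subject)
```

The subject comes from the URI itself, so the sender decides what subject line their unsubscribe handler expects.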

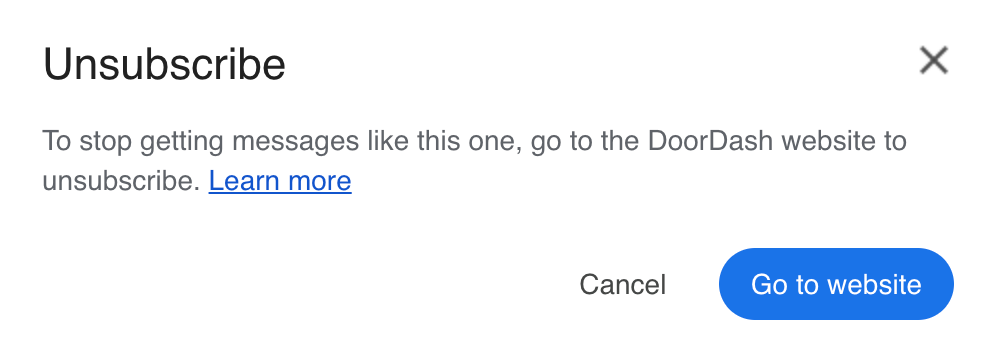

The link method

This takes you to a website to unsubscribe, provided that you take some action on the website. Since we really can't automate this easily, we'll ignore this one for now.

The One-Click Post

This is the best option. It's actually a combination of two headers: `List-Unsubscribe` and `List-Unsubscribe-Post: List-Unsubscribe=One-Click`.

This tells the email client that just going to the link provided in the `List-Unsubscribe` header will unsubscribe, so it can make a headless request on your behalf without any navigation.

This is ideal. This is even easier to automate than sending emails.

All three methods can exist at the same time. For example, a `List-Unsubscribe` header can have both a link and a `mailto` link, while also providing the `List-Unsubscribe-Post` header for one-click unsubscribing. The one-click takes priority (at least in Gmail).
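As a rough illustration of how the three variants can be told apart, the sketch below pulls the angle-bracketed URIs out of a `List-Unsubscribe` value and checks the `List-Unsubscribe-Post` value. The names `parseListUnsubscribe` and `UnsubscribeOptions` are assumptions made for this example and are not the parser used in the repo.

```typescript
// Hypothetical sketch (not the repo's parser): classify the unsubscribe
// methods advertised by a message's headers.
interface UnsubscribeOptions {
  mailto?: string // first mailto: URI, if any
  link?: string // first http(s) URI, if any
  oneClick: boolean // true when the link can be POSTed to directly
}

function parseListUnsubscribe(
  listUnsubscribe: string,
  listUnsubscribePost?: string
): UnsubscribeOptions {
  // URIs are wrapped in <...> and separated by commas. Folded headers can
  // leave whitespace inside a URI, so strip it.
  const uris: string[] = []
  const re = /<([^>]*)>/g
  let m: RegExpExecArray | null
  while ((m = re.exec(listUnsubscribe)) !== null) {
    uris.push((m[1] ?? "").replace(/\s+/g, ""))
  }

  const mailto = uris.find((u) => /^mailto:/i.test(u))
  const link = uris.find((u) => /^https?:\/\//i.test(u))
  const oneClick =
    link !== undefined &&
    (listUnsubscribePost ?? "").trim().toLowerCase() ===
      "list-unsubscribe=one-click"

  return { mailto, link, oneClick }
}

const opts = parseListUnsubscribe(
  "<mailto:spamproc-p01@ca.fbl.en25.com?subject=ListUnsub_1983452_7df27f2187f94c449d166920bc70024c>, <http://app.comcastspectacor.com/e/u?s=1983452&elq=7df27f2187f94c449d166920bc70024c&t=17>"
)
console.log(opts)
```

With no `List-Unsubscribe-Post` value passed in, `oneClick` stays `false` and the link is treated as a plain website link.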

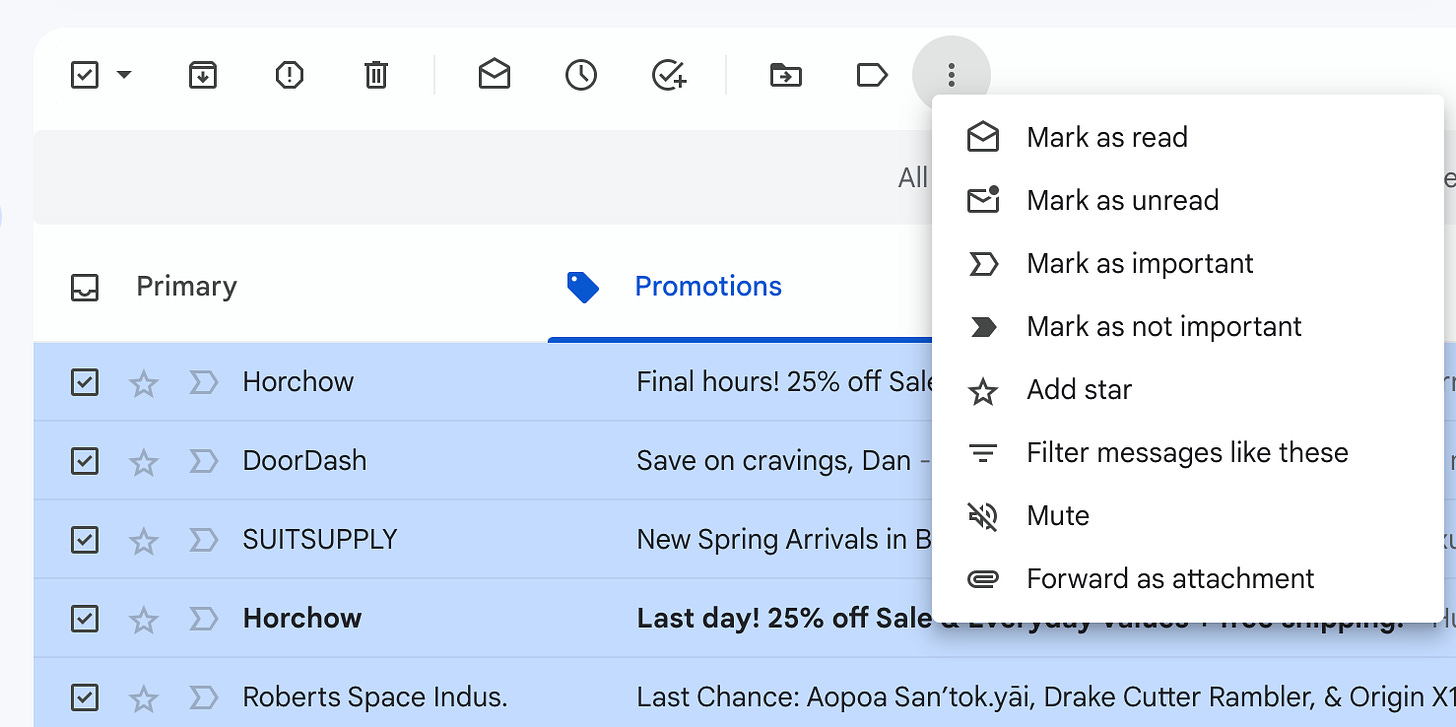

Weirdly enough, gmail does not provide a way to bulk unsubscribe from emails yet:

So we'll go ahead and build a tool to do that ourselves!

Building a tool to automate it

The first thing we have to do is create a Google Cloud project and get credentials so we can use Google OAuth to log in to the app, and make sure we have the right scopes for the operations we need.

The asterisk here is the `mailto` unsubscribe variant: that requires being able to send emails on behalf of the user.

Already, being able to read email metadata (only things like headers, but not the content) is considered a "Restricted Scope", so you can imagine the warnings that Google will throw if I ask to be able to send emails on behalf of the user. This restriction also means a more extensive review process before I can go live.

But then I had the epiphany: Can I send the unsubscribe email on their behalf?

Investigating some emails, I noticed that a `Message-ID` header shared the same value as the address of the `List-Unsubscribe` mailto address:

```
List-Unsubscribe: <mailto:Horchow-HP2v60000018d4fff8e63aadd406e96c65220163@mail.horchow.com?subject=list-unsubscribe>
Message-ID: <HP2v60000018d4fff8e63aadd406e96c65220163@mail.horchow.com>
```

Similarly, in another email, I noticed that the URL paired with the mailto in the header had the same ID embedded in it:

```
List-Unsubscribe: <mailto:spamproc-p01@ca.fbl.en25.com?subject=ListUnsub_1983452_7df27f2187f94c449d166920bc70024c>,
  <http://app.comcastspectacor.com/e/u?s=1983452&elq=7df27f2187f94c449d166920bc70024c&t=17>
```

This indicated to me that the address was unique, meaning I could likely unsubscribe on their behalf! Massive victory! In fact it makes sense: with forwarding and inbound-only addresses, senders can't guarantee that someone can send from a given address (e.g. noreply).

Google gave me the absolute hardest time getting this app approved.

They required using some arcane Java PWC scanner which claimed false CSRF vulnerabilities (doesn’t know about SameSite cookies…) and their alternative open source scanner had nix configuration errors.

As a result it took weeks to get this past Google’s review :(

Listing emails

The next thing to do is list emails as fast as possible, reading the headers and looking for unique values of `List-Unsubscribe`. Each unique value is probably a different mailing list.

We only want ones that either have the `mailto` value, or have the link and include the delightful `List-Unsubscribe-Post: List-Unsubscribe=One-Click` option.

It's simple to grab them with the `googleapis` package, but unfortunately we have to double-dip and get each item individually after we list because of our restricted scopes:

```typescript
const unsubabble = (
  await Promise.all(
    messages.data.messages.map(async (msg) => {
      // The list call only gives us IDs, so fetch each message's metadata (headers only)
      const mcontent = await gmail.users.messages.get({
        access_token: accessToken,
        userId: "me",
        id: msg.id!,
        format: "metadata",
      })
      return mcontent
    })
  )
).filter((msg) =>
  // Keep only messages that advertise an unsubscribe method
  msg.data.payload?.headers?.some(
    (header) => header.name === "List-Unsubscribe"
  )
)
```

The headers tell us everything we need to know, like the sender from the `From` header (for listing only), and the `List-Unsubscribe` headers to figure out if and how we can unsubscribe.

With a bit of parsing we can get a good-looking array of objects to render:

{

Subject: 'Bulk pricing on CLASSROOM DESKS | Get 5% off »',

Sender: {

Email: 'editor@members.wayfair.com',

Name: 'Wayfair Professional'

},

MailTo: undefined,

OneClick: 'https://www.wayfair.com/v/account/email_subscriptions/unsubscribe?csnid=d1088d57-3467-46c0-ba00-ada1cef0f785&unsub_src=em_header&_emr=354abd98-58e0-4635-b5e3-e3dcc264996b&channel=1&wfcs=cs5&topic=4&refid=MKTEML_94210'

},

{

Subject: 'NHL Stadium Series Jersey Presale Starts Now',

Sender: {

Email: 'updates@events.comcastspectacor.com',

Name: 'Wells Fargo Center'

},

MailTo: 'spamproc-p01@ca.fbl.en25.com?subject=ListUnsub_1983452_7df27f2187f94c449d166920bc70024c',

OneClick: undefined

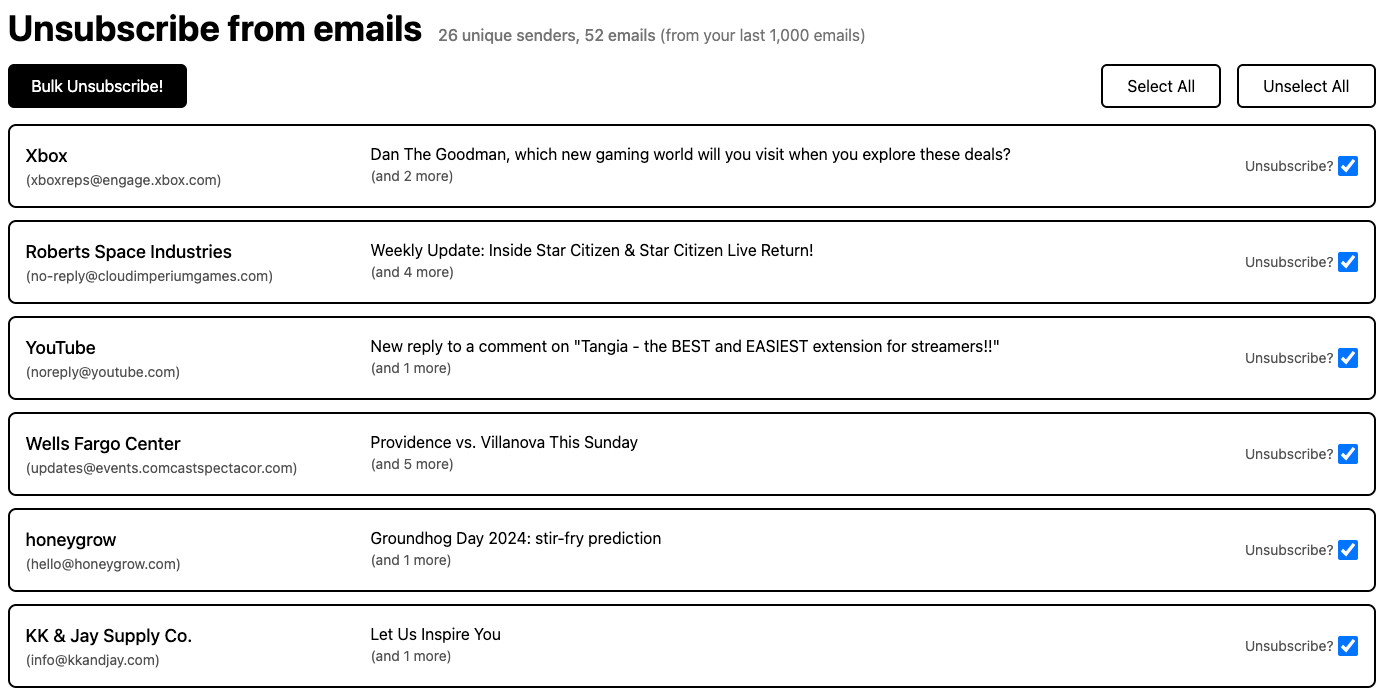

}Finally, we can show it on the UI for users to review. We'll take the first item for each unique Name & From email combo and show the first one, along with how many more match:
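The grouping step could look something like the sketch below. The `ParsedEmail` shape follows the objects shown above (which fields are optional is a guess), while `groupBySender` and `SenderGroup` are hypothetical names introduced only for this example.

```typescript
// Shape based on the parsed objects shown in the post; optionality is assumed.
interface ParsedEmail {
  Subject?: string
  Sender: { Email: string; Name?: string }
  MailTo?: string
  OneClick?: string
}

// Hypothetical grouping result: the first message seen for a sender, plus the
// subjects of every later message from the same sender.
interface SenderGroup {
  first: ParsedEmail
  otherSubjects: string[]
}

function groupBySender(emails: ParsedEmail[]): SenderGroup[] {
  const groups = new Map<string, SenderGroup>()
  for (const email of emails) {
    // Key on name + address so the same address under two names stays separate
    const key = `${email.Sender.Name ?? ""}\u0000${email.Sender.Email.toLowerCase()}`
    const group = groups.get(key)
    if (group) {
      group.otherSubjects.push(email.Subject ?? "(no subject)")
    } else {
      groups.set(key, { first: email, otherSubjects: [] })
    }
  }
  // Map preserves insertion order, so groups appear in first-seen order
  return Array.from(groups.values())
}

const groups = groupBySender([
  { Subject: "Sale A", Sender: { Email: "editor@members.wayfair.com", Name: "Wayfair Professional" } },
  { Subject: "Presale", Sender: { Email: "updates@events.comcastspectacor.com", Name: "Wells Fargo Center" } },
  { Subject: "Sale B", Sender: { Email: "editor@members.wayfair.com", Name: "Wayfair Professional" } },
])
for (const g of groups) {
  console.log(`${g.first.Sender.Name}: "${g.first.Subject}" (+${g.otherSubjects.length} more)`)
}
```

Keeping the extra subjects on each group is also enough to drive a hover list of the remaining messages.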

Out of only 100 emails (I kept hitting rate limits when testing with 1,000 😬), 52 of them were unsubscribe-able!

A 52% reduction in emails sounds awesome, even if gmail is automatically filing them away without notifications.

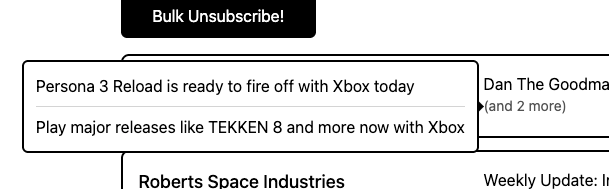

We'll also show the remaining subjects on hover:

Because it takes a while to list and query 1,000 emails (even with full concurrency 😅), we use Remix's deferred data loading to render a loading state (which I really like, except for the weird typing):

```tsx
<Suspense fallback={<Loading />}>
  <Await resolve={data.unsubabble}> {/* await a promise from the loader */}
    {(u) => {
      const unsubabble = u as ParsedEmail[] | undefined
      // ...render rows
    }}
  </Await>
</Suspense>
```

To unsubscribe we need to do one of two things:

1. Send an email (mailto)

2. Make a request to an endpoint (One-Click)

We'll prioritize the One-Click if that exists, and if that is not an option, we will send an email on their behalf.

Because we don't store the information, we list emails synchronously in the browser session. However, we don't want users to have to stick around while the unsubscribing is happening, so we need some form of durable execution.

Building durable execution

There are already a lot of great tools in the ecosystem for this: Temporal, go-workflows, Inngest, and various task queue packages. Unfortunately, they either require running another service, don't support SQLite, or don't support Node.js. I also couldn't be bothered to explore or trust someone else's code, so we'll have to make our own!

Because the actions we need to process are very well defined, we'll make simple concepts of Workflow and Tasks, where a single Workflow has multiple Tasks. We will also define all of the tasks to complete at workflow creation time, but nothing stops a task from appending new tasks to the workflow as needed. We'll create a table for listing `workflows` that have an ID and a status, and a table for `workflow_tasks` that has a simple sequence number (`seq`), `task_name` to complete, and `data` to pass into that task.

```sql
create table if not exists workflows (
  id text not null,
  name text not null, -- human friendly name
  metadata text,
  status text not null, -- pending, completed, failed
  created_ms int8 not null,
  updated_ms int8 not null,
  primary key(id)
);

create table if not exists workflow_tasks (
  workflow text not null,
  task_name text not null,
  seq int8 not null,
  status text not null, -- pending, completed, failed
  data text,
  return text,
  error text,
  created_ms int8 not null,
  updated_ms int8 not null,
  primary key (workflow, seq)
);
```

Task runners can be registered, which helps workflows execute tasks.

```typescript
export interface TaskExecutionContext<Tin> {
  workflowID: string
  seq: number
  data: Tin
  attempt: number
  wfMetadata: any | null
  preparedData?: any
}

export interface TaskExecutionResult<Tout> {
  data?: Tout
  error?: Error
  /**
   * "task" aborts this task and continues the workflow.
   * "workflow" aborts the entire workflow.
   */
  abort?: "task" | "workflow"
}

export interface TaskRunner<Tin = any, Tout = any> {
  Name: string
  Execute(ctx: TaskExecutionContext<Tin>): Promise<TaskExecutionResult<Tout>>
  Prepare?(ctx: TaskExecutionContext<Tin>): Promise<any | undefined>
}
```

The `Prepare` method allows us to do something once when we (re)start executing the workflow, like getting a fresh access token. The result is never persisted.

On restart, we can list all workflows in the `pending` state, get the `workflow_task` that is not completed or failed with the smallest `seq` number, and continue processing. Once we reach the final task, we can mark the workflow as completed, which will halt any further processing of this workflow.
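The selection rule described above (the not-yet-finished task with the smallest `seq`) can be illustrated in memory. This is only a sketch of the rule, not the query the runner actually issues. `TaskRow` mirrors a few columns of `workflow_tasks`, and `nextPendingTask` is a hypothetical helper.

```typescript
type TaskStatus = "pending" | "completed" | "failed"

// Mirrors a subset of the workflow_tasks columns
interface TaskRow {
  workflow: string
  task_name: string
  seq: number
  status: TaskStatus
}

// Hypothetical helper: pick the task to resume from, i.e. the pending task
// with the smallest seq for the given workflow.
function nextPendingTask(rows: TaskRow[], workflowID: string): TaskRow | undefined {
  let next: TaskRow | undefined
  for (const row of rows) {
    if (row.workflow !== workflowID || row.status !== "pending") continue
    if (next === undefined || row.seq < next.seq) next = row
  }
  // undefined means nothing is left, so the workflow can be marked completed
  return next
}

const rows: TaskRow[] = [
  { workflow: "wf-1", task_name: "unsubscribe", seq: 1, status: "completed" },
  { workflow: "wf-1", task_name: "unsubscribe", seq: 2, status: "failed" },
  { workflow: "wf-1", task_name: "unsubscribe", seq: 4, status: "pending" },
  { workflow: "wf-1", task_name: "unsubscribe", seq: 3, status: "pending" },
]
console.log(nextPendingTask(rows, "wf-1")?.seq)
```

Because completed and failed tasks are skipped, a restart never re-runs work that already reached a terminal state.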

Executing workflows is pretty simple: we just spin on tasks as long as we can continue processing. From `durable/workflow_runner.server.ts`:

```typescript
let attempts = 0
while (true) {
  // Reset the retry counter for each new task
  attempts = 0
  // Process the tasks
  while (true) {
    // Get the next task
    const task = await db.get<WorkflowTaskRow>(`...`)
    if (!task) {
      wfLogger.info("no pending tasks remaining, completing")
      return await this.updateWorkflowStatus(workflowID, "completed")
    }

    if (!this.taskRunners[task.task_name]) {
      taskLogger.error("task name not found, aborting workflow (add task and reboot to recover workflow, or update task in db for next attempt)")
      return
    }

    // prepare task if we haven't yet
    if (
      !prepared[task.task_name] &&
      this.taskRunners[task.task_name].Prepare
    ) {
      prepared[task.task_name] = await this.taskRunners[task.task_name]
        .Prepare!({...})
    }

    // run the task
    const result = await this.taskRunners[task.task_name].Execute({...})
    if (result.error) {
      if (result.abort === "workflow") {
        // fail workflow and task
        return // we are done processing, exit
      }
      if (
        result.abort === "task" ||
        result.error instanceof ExpectedError
      ) {
        // fail task
        break
      }

      // sleep and retry
      await new Promise((r) => setTimeout(r, this.retryDelayMS))
      attempts += 1
      continue // keep spinning
    }

    // Completed
    await this.updateTaskStatus(workflowID, task.seq, "completed", {
      data: result.data,
    })
    break
  }
}
```

Then in `entry.server.tsx` we can initialize and run recovery:

```typescript
export const workflowRunner = new WorkflowRunner({
  taskRunners: [new UnsubscribeRunner()],
  retryDelayMS: 5000,
})

workflowRunner.recover()
```

If the server gets shut down, it will resume executing workflows when it reboots. We can test this by intentionally failing in a task:

```
[11:56:30.442] DEBUG (86600): executing task
    workflowID: "fcf20724-a0fe-41e2-8ffd-0580b83220ab"
    seq: 3
    attempts: 0
aborting
# CRASH!!!!! comment out crash & restart server...
[11:56:44.527] DEBUG (86760): recovering workflows
[11:56:44.528] DEBUG (86760): recovered workflow
    worfklowID: "fcf20724-a0fe-41e2-8ffd-0580b83220ab"
# See how the old workflow starts at task 3? Pretty cool!
[11:56:44.530] DEBUG (86760): executing task
    workflowID: "fcf20724-a0fe-41e2-8ffd-0580b83220ab"
    seq: 3
    attempts: 0
...
[11:56:44.568] INFO (86760): workflow completed
    workflowID: "fcf20724-a0fe-41e2-8ffd-0580b83220ab"
```

Workflows run concurrently, and all tasks in a workflow run sequentially.

To make sure this doesn't grow unbounded, both on server start and every hour we delete any workflow or task rows that haven't been updated in the last 7 days. This currently does a full scan and logs how long it takes.
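The retention rule boils down to comparing `updated_ms` against a cutoff. Here is a small in-memory sketch of that comparison. `RETENTION_MS`, `isExpired`, and `pruneExpired` are hypothetical names, and the real cleanup runs as a delete against SQLite rather than a filter over an array.

```typescript
// 7 days in milliseconds, matching the retention window described above
const RETENTION_MS = 7 * 24 * 60 * 60 * 1000

// A row is expired when it was last updated before the cutoff
function isExpired(updatedMs: number, nowMs: number): boolean {
  return updatedMs < nowMs - RETENTION_MS
}

// Hypothetical in-memory stand-in for the cleanup: keep only rows that were
// updated within the retention window.
function pruneExpired<T extends { updated_ms: number }>(rows: T[], nowMs: number): T[] {
  return rows.filter((row) => !isExpired(row.updated_ms, nowMs))
}

const now = Date.now()
const kept = pruneExpired(
  [
    { id: "old", updated_ms: now - 8 * 24 * 60 * 60 * 1000 },
    { id: "recent", updated_ms: now - 60 * 60 * 1000 },
  ],
  now
)
console.log(kept.map((row) => row.id))
```

Passing `nowMs` in explicitly keeps the check deterministic, which makes it straightforward to test.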

Oh dear... this is still going to be a lot of rows in SQLite... I'm sorry little one...

A note about privacy

As you can tell from the post so far and the open source code (same used in production), no sensitive data is stored. You can explore the privacy policy on the site, but TLDR the most that's kept is logs/operational data (durable execution state) of the target email and/or link, and maybe some headers if they aren't in an expected format.

Unsubscribing

Finally, we can unsubscribe from some emails!

The user will then submit their selection to an action, which will create a workflow with a task for each of the messages that match the selection. For security, users will only submit message ids, which we’ll refetch using their token to prevent abuse.

```typescript
export async function action(args: ActionFunctionArgs) {
  const user = await getAuthedUser(args.request)
  if (!user) {
    return redirect("/signin")
  }

  const formData = await args.request.formData()
  const msgIDs = formData.getAll("msgID")

  await workflowRunner.addWorkflow({
    name: `Unsubscribe for ${user.email}`,
    tasks: msgIDs.map((id) => {
      return {
        taskName: "unsubscribe",
        data: {
          id,
        },
      }
    }),
  })
  // ...
}
```

Then each task execution will fetch the message from Google, re-parse, and unsubscribe:

```typescript
const existing = await selectUnsubedMessage(userID, ctx.data?.id!)
if (existing) { // aborting, message already handled
  return {}
}

const [parsed] = parseEmail([msg.data])
if (parsed.OneClick) {
  // If One-Click, POST to the link
  const res = await fetch(parsed.OneClick, {
    method: "POST",
  })
} else if (parsed.MailTo) {
  // If email, send email
  await sendEmail(
    parsed.MailTo,
    parsed.Subject!,
    "Unsubscribe request sent via bulkunsubscribe.com"
  )
} else {
  throw new ExpectedError("no valid unsub action")
}
```
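One detail worth noting for the One-Click branch: RFC 8058 says the POST should carry the `List-Unsubscribe=One-Click` key/value pair as its body, sent as form data. The sketch below builds such a request without sending it. `buildOneClickRequest` and `OneClickRequest` are hypothetical names, and whether a given sender's endpoint actually enforces the body is not something the post establishes.

```typescript
// Hypothetical helper: describe a One-Click unsubscribe POST. Returning the
// URL and init separately keeps the example free of network calls.
interface OneClickRequest {
  url: string
  init: {
    method: "POST"
    headers: Record<string, string>
    body: string
  }
}

function buildOneClickRequest(url: string): OneClickRequest {
  return {
    url,
    init: {
      method: "POST",
      // RFC 8058 permits application/x-www-form-urlencoded for the body
      headers: { "Content-Type": "application/x-www-form-urlencoded" },
      body: new URLSearchParams({ "List-Unsubscribe": "One-Click" }).toString(),
    },
  }
}

const req = buildOneClickRequest("https://example.com/unsubscribe?id=123")
console.log(req.init.body)
// To actually send it: await fetch(req.url, req.init)
```

The URL here is a placeholder. In practice it would be the link parsed out of the message's `List-Unsubscribe` header.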

Emailing

I intended to use AWS SES because they don't have a high barrier to entry (e.g. $15/mo), and their unit costs are the lowest. But they decided that this “could have a negative impact on our service” 🙄.

This means we’ll have to use a more expensive provider, or host something ourselves on a hosting provider that lets us use port 25.

Launch!

This stack will be deployed on a Hetzner VM, sitting behind Cloudflare to manage TLS and any caching I might need. I'll add the `pino-axiom` log transport so logs get sent to Axiom, and I can set up alerts there. I'll also add website monitoring to check if it's up.

Lastly, I'll add some code to keep an eye on disk usage and let me know if it gets too low:

```typescript
import { statfs } from "fs/promises"

const diskCheckLoop = setInterval(async () => {
  const stats = await statfs(process.env.DISK_PATH || "/")
  const totalSpace = stats.bsize * stats.blocks
  const availableSpace = stats.bsize * stats.bfree
  if (availableSpace < totalSpace * 0.15) {
    // Less than 15% of disk space left, notify
    logger.error("less than 15% disk space remaining!")
  }
}, 30_000)
```

We'll then throw it on Hacker News, Product Hunt, Bookface (YC's internal social media), and maybe even Reddit and see how it goes!

Recurring unsubscribe

Because this does not delete emails, it should be safe to run on an interval in the background, simply selecting everything and unsubscribing (if that message ID hasn't been unsubscribed before).

I think this would be an excellent paid offering for something minuscule like $3/month.

I'll make a simple "get notified" button on the top nav after someone has unsubscribed to see if they want recurring. If enough people sign up, then we'll make it!